When I was riding the waves of IRC as a kid in the mid-00s, I discovered a small community centered around a then-obscure game engine named ZZT. Fifteen years later, I decided to embark on a journey to reconstruct its missing source code. The end result was the Reconstruction of ZZT - a source tree whose build scripts create a ZZT.EXE byte-for-byte identical to the final official release. This article serves as a clarification of the context and a recollection of the methods in which I got there.

The article is split into three main parts:

- “Prelude”, presenting the context in which the Reconstruction was conceived,

- “Process”, describing the process of decompilation itself,

- “Postgame”, discussing the initial release and its aftermath.

Prelude

What is ZZT?

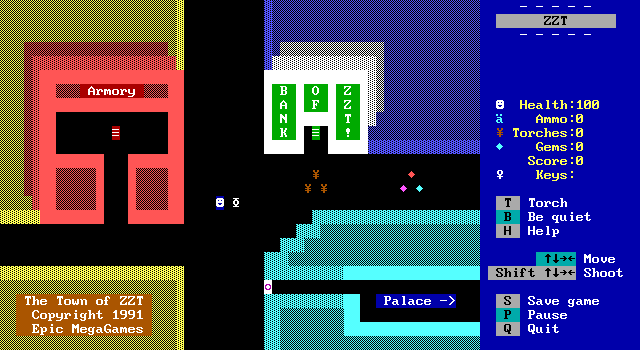

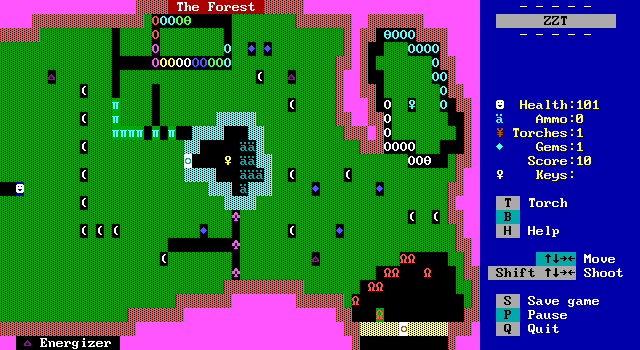

Quoting from the de facto archive of the ZZT community, the Museum of ZZT:

ZZT is a text-mode game from 1991 created by Tim Sweeney of Epic Games. ZZT has its own editor and scripting language which offers what may still be an unmatched level of accessibility to beginning game developers. […] ZZT’s simple ZZT-OOP scripting language gave many a friendly introduction to programming.

Despite its age, ZZT was not the first game creation tool - far from it! However, on top of offering a good balance of ease-of-use and capability, it was probably the first one to have a built-in editor available for free. The shareware version put no restrictions on editing, creating, and sharing new ZZT game worlds - the business model was solely in providing additional “official” game worlds. This caused its popularity to grow significantly. Quoting Tim Sweeney via Gamasutra:

With Kroz, […] the editor you had to pay money to get, so most people never got the editor or never saw it. So you didn’t have this sort of user community developing around the editor.

ZZT included the editor in the shareware version and everybody was able to do it whether or not they sent in money. That was a huge factor in it being successful, I think.

The game became a kind of “niche hit” of the BBS era, with its popularity continuing into the era of AOL and the early World Wide Web. As time went on, the community embarked on increasingly ambitious projects and collaborations. On top of that, new discoveries and tricks broke the boundaries of what was considered possible in the already-dated engine. While the popularity of ZZT waned from the mid-00s onwards, it has recently seen a kind of rebirth thanks to Dr_Dos’s work on documenting and showcasing classic game worlds, as well as creators - old and new - creating new content for it.

There are literally thousands of game worlds available for ZZT, plus a small but vibrant community working with it to this day! I highly recommend you check it out. The Museum of ZZT is a good place to start - you can even play it in the browser!

Binary outlook

With the last update to ZZT coming out in May 1992 (even though the last copy shipped in 2013 - I wish I owned one myself), there has been a lot of time to wish for change.

Many creators wanted to work with a more advanced game creation tool, albeit keeping a similar aesthetic. Most of those took on the form of new engines, the most famous of which was MegaZeux by Alexis Janson - a very notable ZZT game creator. It followed a very similar business model and attracted many seeking a “sequel” to ZZT. It was open-sourced in 1998, from where the community took over - new platforms, features and enhancements are being added to this day!

Some wished to see ZZT ported beyond DOS. However, even recently, the most popular solution remains emulating a DOS environment - usually via DOSBox. Nowadays, many use a dedicated emulator called Zeta engineered specifically with ZZT’s behaviour in mind, allowing workarounds for some of its quirks. While many attempts to reimplement the engine were made over the years, none reached full accuracy. Due to the engine’s long legacy, the community has adopted a significant amount of bugs and quirks as features, relying - accidentally or otherwise - on very minute details of its behaviour. As such, most of the community stuck with the original EXE.

Others simply hoped for patches fixing some bugs. While attempts to modify the binary itself, such as WiL’s ZZT 4.0/4.1, existed, they were considered unreliable and not recommended for non-experimental use.

The binary-only nature of ZZT restricted a lot of what could have been done with it in practice. Wouldn’t the best answer be to follow MegaZeux (and even Kroz!) and release ZZT as open source? … Well, about that - quoting Tim Sweeney:

Please don’t ask for the source; if I had it, I’d release it, but I lost it in a crash a long time ago.

So much for that, then - the dream scenario was not happening. Did that mean that nothing could be done at all, though?

The lack of source code availability has not stopped hobbyists and professionals alike from porting or enhancing games, usually via meticulous reverse-engineering. Many public decompilation efforts have been attempted, especially nowadays - some have even reached the point of byte-for-byte accuracy while targetting C and not just as a disassembly! Their existence implies that the same could be done for ZZT - especially that it is a smaller codebase than many of those efforts.

However, there was still something to consider. With how tight-knit ZZT’s modern scene is, I feared that working on such a project without permission would noticeably split the already tiny community. Many people would not feel comfortable developing projects and enhancements with an unclear copyright situation. With this in mind, I brought up the idea as a remark on Discord and shelved it for… most likely never… unless?

But what is there to lose, anyway?

Encouraged by many friends, I decided to try and ask Tim for permission in early February. At some point, I realized that if I’m not going to work on the project in an unofficial capacity, a rejection would not change my situation in any way.

Miraculously, he said yes! After some discussion, we agreed upon creating a decompilation of ZZT’s binary and releasing it under the MIT license had it reached completion. With permission in hand, I immediately got to work.

Process

Analysis

The first step was to examine what exactly I was about to work with.

zzt.zip, the last release of ZZT, comes with quite a few files - order forms, text files, game worlds. The two files related to the engine itself are:

ZZT.EXE, a 47,764-byte executable. It was common knowledge in the community that it was packed with LZEXE - a quick decompression yields a 96,080-byte executable, which is what I was going to manually decompile.ZZT.DAT, an (optional) file containing the built-in help documents - we’ll get back to it later,

In order to recreate the source code, I had to figure out what compiler and language were originally used. Helpfully, a note was present in ZZT 2.0 and 3.0’s help files:

The software was created with Turbo Pascal 5.0, an excellent programming tool from Borland International.

(In the end, it turned out to actually be Turbo Pascal 5.5. This was incredibly lucky, as that’s one of the versions officially made available free of charge by Borland!)

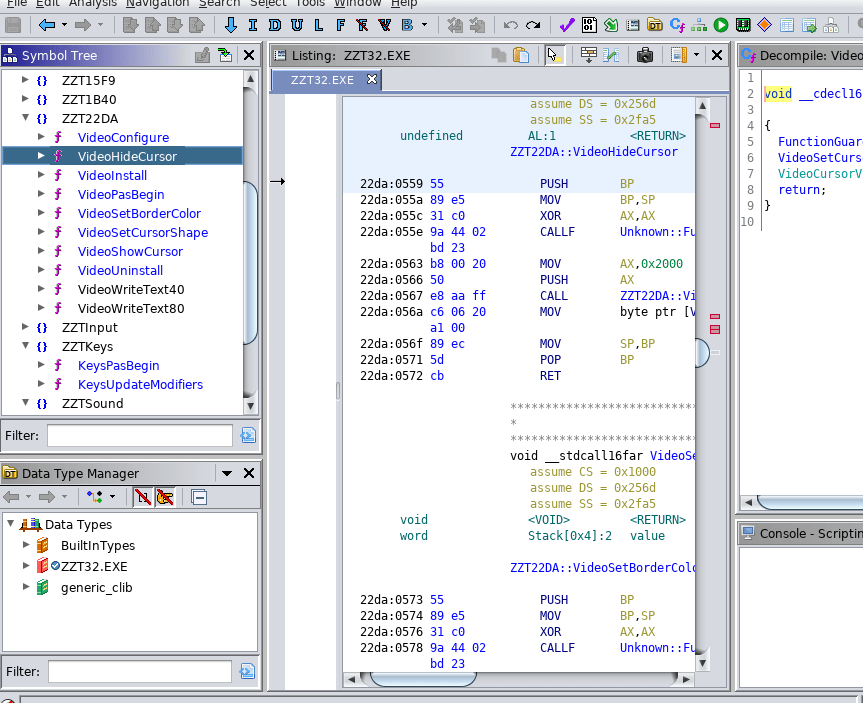

I also had to pick my reverse-engineering toolset. There are a few tools available for this purpose, and I examined the following:

- IDA Pro is perhaps the most popular choice - a mature, commercial reverse engineering suite created by Hex-Rays. However, not only was a license far beyond my budget, newer versions dropped support for “real mode” x86 code. I noticed that ScummVM has been granted permission to host the last “free” version which did support it, but by that point decided to look for alternatives.

- radare2 is a powerful open-source toolkit for reverse engineering. While it can do a lot, it has a steep learning curve and a command-line focus. I tried working with it, but ultimately sought other options.

- Ghidra is a relative newcomer (for most) - long used internally at the NSA, it was made available as free software in 2019. It comes with a capable decompiler, which would certainly help me gain a higher-level understanding of the code. On top of this, it also still supports “real mode” x86 code! In the end, this is what I chose.

Once the choices were made, I opened ZZT.EXE in Ghidra - but what I found was effectively gibberish, with more than one thousand detected segments and nonsensical code flow. It also only affected the compressed versions of ZZT - the first release, 2.0, which was not compressed, loaded perfectly fine. What was going on?

In the end, Bellard had the first laugh

I did eventually find the answer to this question, and its explanation requires a slightly deeper look into 16-bit x86 programming and the DOS .EXE file format.

The 8086 CPU operated on a segment-offset model. In order to expand the amount of memory visible to code beyond 64 kilobytes - the maximum amount addressable by a 16-bit register - additional 16-bit registers were added. They were called segments and allowed providing offsets for accessing code, data and stack information. They operated in units of 16 bytes, which allowed for increasing the width of addressable memory to 20 bits - 1 megabyte.

DOS programs initially used COM files, which just stored code but this caused a limitation of size to one segment’s worth (64 kilobytes). Conversely, EXE files were designed to be relocatable - loadable to multiple arbitrary memory segments. This goal was accomplished using a relocation table - a list of pointers to segment values stored in the code, which had to be adjusted after loading. The pointers themselves were stored using 4 bytes: two for the offset of the location and two for the segment it was stored in, both relative to the beginning of code.

Fabrice Bellard, the creator of LZEXE, noticed that this is inefficient:

- the pointers in a relocation table are usually stored sequentially,

- it does not actually matter if the segment-offset pair is the same, as long as it points to the same location in the code to be edited - the addressable memory is still limited to 20 bits, yet 32 bits (4 bytes) are used to store each pointer!

As such, the compressed EXE utilizes delta encoding for the relocation table, storing just the difference - in bytes - between consecutive pointer addresses. UNLZEXE, a popular decompression tool, always unpacks them in the same way - putting 16 of the 20 bits in the segment, and 4 in the offset. This creates addresses of the form “XXXX:000X”, thus creating a rather high number of segments!

As Ghidra trusts the relocation table to split code and data into their relevant segments, this throws it off and causes issues. (Interestingly, radare2 does not make this assumption and processes such an executable correctly.)

However, the pointers point to segments, right? I knew a relatively standard compiler was used, so I could trust the segment values there - and reconstruct the correct pointers in the relocation table. The solution I came up with was to write a small script, which:

- read all segment values pointed to by the relocation table,

- sorted them into a list,

- rewrote the unpacked relocation table so that all pointers were moved to the nearest known segment.

The script is available in the Reconstruction of ZZT repository as MISC/relocfix.py. It allowed recovering the segment information well enough for Ghidra to correctly read the file. Success!

Solving the puzzle

What I had in front of me now was a semi-organized blob of assembly code, complete with Ghidra’s (semi-)helpful decompilations. The next step - making sense of it! But how does one do that?

A good place to start was strings. Most were located next to the function they pertained to - and finding a piece of text like “Gems give you Health!” or “Video mode: C)olor, M)onochrome?” gave a pretty good insight into what the code in question could be doing.

From there, connections were made and internal structures slowly figured out. Existing documentation on the ZZT world format was quite helpful in this process, as the in-memory format turned out to actually be pretty much identical to the on-disk data!

After having a small percentage of the codebase figured out, I started working on the other half of the project - rewriting it into Turbo Pascal’s language. It took me a while to get up to speed, as the last time I had done anything in Pascal was in 2009. I was far from knowing everything I needed to, but I made sure to diligently mark any non-reversed spot with { TODO } in order to get back to them later. I really wanted to get something functional early, as experimenting with even partial code would allow me to get deeper insights into its real-world operation.

A helpful early observation was that in Turbo Pascal’s linking mode, each code segment corresponds to a single unit. With that in mind, I could figure out how many .PAS files I had to create and which functions belonged where. (Still had to name them, though.) There was only one segment for data, so I created a file called GAMEVARS.PAS which just contained all of them in the EXE file’s original order. Some were, however, moved to their respective units later. This was based on insight gained from locations of usage, as well as linking order, which was (thankfully!) determinstic.

Now, you might recall that Ghidra doesn’t decompile to Pascal - it decompiles to C. I ended up relying on both the decompiler output and original assembly - the former was a good way to observe the general flow of code, while the latter helped me figure out more specific things, like correct pointer locations and specific Pascal constructs used.

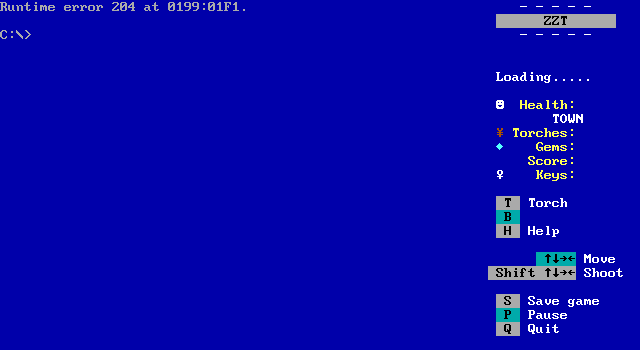

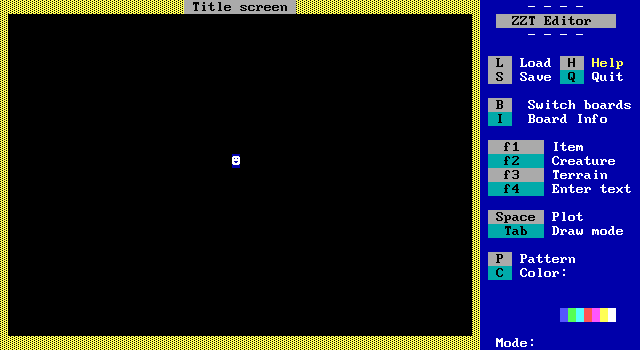

Eventually, I got the initial configuration prompt to work. From there, I continued to interleave making progress in understanding the binary and writing new chunks of the source, until I finally got this screenshot:

… Just kidding. The screenshot I’m talking about is this one, from February 14th:

The first true sign of it getting somewhere in a game world! I was excited and continued with the work, regularly hacking away on it. Just one day later, I managed to get much further:

However, it was obvious that the journey had only just started. The codebase was full of { TODO } markings and many parts simply didn’t behave right. Already, I knew this was clearly one of those projects where 20% of the work would end up taking 80% of the time.

Solving the puzzle, in broader strokes

The general plan I had in mind by now was as follows:

- Reverse engineer a sufficient part of

ZZT.EXEto have a general overview of the codebase. (Complete!) - Alternate between writing source code for the areas I mostly understood and using the resulting insight to figure out more of the codebase in Ghidra. (Ongoing!)

- Ensure that all functions have been transcribed.

- Ensure that no TODO comments remained in the source code.

- Get from here to a byte-identical EXE file, somehow?

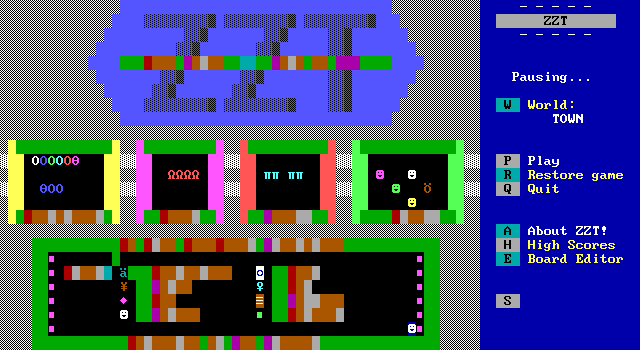

The work at this point was fairly methodical - by February 22nd, I managed to transcribe a large chunk of the game logic and moved on to doing the same with the editor:

To have an idea of where I was standing in terms of progress, I occasionally measured it with a “size of my compiled EXE, in KB/size of the original EXE, in KB” notation as follows:

- February 15th: 29.2/96

- February 21st: 40.0/96

- February 22nd: 52.0/96, a little more than halfway through!

In practice, Ghidra has proven itself to be a massive aid - and perhaps the reason it was possible at all - but it was not without flaws. Crucially, Ghidra’s real-mode support was just… lacking.

- The decompiler did not seem to understand segments very well. Manually hardcoding the correct values for each code area seemed to help a little, but referencing ASM was still required for quite a few areas.

- One of the procedures in

TXTWIND.PASwent across the 64K barrier, which seemed to cause the code flow logic to get very confused - it assumed that the code just… wrapped around? I mitigated this by copying the affected procedure somewhere else in memory and examining it from there, but it was a little annoying in other areas too, with function pointers also getting messed up in the process. - Something I only realized later is that Ghidra’s decompiler did not necessarily order if…else code segments in the order of the original assembly. Now, this makes sense for what it was made for, and I’m not blaming the tool here - but it did prove to be a mild annoyance when going back and making the result more accurate.

Keep in mind that the above-mentioned problems may have already been reported and/or worked on by now - for all I know, they may already be fixed. (I do recall seeing many issues and PRs pertaining to x86 real-mode support even as I was working on the Reconstruction!)

With time, I also got better at figuring out what x86 assembly instructions mapped to which Pascal constructs. It helps that Turbo Pascal’s name referred not to the speed of its output code, but the speed of its compilation. Its optimization techniques were nowhere near as sophisticated as modern compilers, while the resulting machine code was not far off, in a structural sense, from the original Pascal. (One of the more annoying fixes was when I found out that there’s a subtle difference in output assembly code when with is used to refer to a variable, as opposed to a pointer.)

Thanks to getting more accustomed to everything, as well as the excitement of getting more and more features checked off, progress picked up:

- February 22nd: 52.0/96

- February 24th: 62.0/96

- February 26th: 66.1/96

- February 27th: 68.0/96

- February 28th: 72.1/96

- Feburary 29th, morning: 74.1/96

- February 29th, evening: 89.3/96

Finally, on March 1st, I got where I wanted to be. 96/96. This means that the file sizes were (roughly) identical and all functions have been transcribed.

Of course, the files were not identical yet - neither in content nor in behaviour. That would have been too easy.

Adjustments, adjustments…

The next step was to adjust all functions to perfectly match the original ZZT.EXE - but how?

Instead of looking for dedicated executable code comparison tools - which I probably would have done had the original file not been relatively small - I decided to go for the least technologically advanced solution:

- open two instances of Ghidra,

- adjust them to the same location in code,

- use a tiling window manager to rapidly flip between two windows, using my eyes to find obvious differences in opcodes/code length, and using Page Down to make my way through the code.

(I’m going to hide behind the “if something is stupid, but works, then it is not stupid” defense.)

The main issue here was discovering many places where my assumed Pascal code was correct functionally, yet wrong literally. It sometimes took a fair amount of guessing to figure out what combination of higher-level code structures gave a specific end result!

By following this procedure for a few days (and marking verified areas with { CODEOK }), I got to a point where all functions had equal locations in both the recompiled and original .EXE, which also meant that they had matching lengths. I also adjusted the order and size of variables on the heap in a similar way. On March 6th, I decided to check if the built file had a matching hash…

It did not! Something still wasn’t quite right after all. Turns out that my visual processing is not perfect - about 40 bytes were different between the two .EXE files. The final approach was to use radiff2 to compare them. Most of the remaining problems were typos, flipped if/else conditions, and stack/heap size fields in the executable header. Finally, after another day of work, in the late hours of March 7th, I got the output I wanted to see:

6a7f8d7f60f33f43ca4b008c02ae436cb4025da259b5c73947580f6ddf06fadb ZZT.EXE

One hundred and twenty hours in, I managed to write a set of source code which built an identical copy of the ZZT binary to the one I was reverse-engineering. The tough part was finished.

Finishing touches

There was one more thing left to work on - the .DAT file. I could have probably gotten away with leaving it alone, as it hasn’t been given much attention in the community prior, but I decided to let my inner completionist guide me. (Also, I had already figured out the format from the parser in ZZT itself.)

ZZT.DAT stores a kind of “virtual file system” which ZZT can choose to read help files from - it replaced the same files being stored externally, as was the case in ZZT 2.0.

There were no hopes of a byte-for-byte reconstruction here. The .DAT file uses constant-length string fields for internal filenames, but - as they were never cleared in memory - bytes following the names themselves were random. However, I decided to make a tool to pack and unpack .DAT files regardless. This made the build system more complete, with all of the game’s data being editable.

(Trivia: Curiously, this wouldn’t be the first time someone modified the help file! However, all previous attempts simply put the new/modified help files in ZZT’s folder as-is, with the original file being hex-edited to change all filenames in it to unused ones.)

With this taken care of, all that remained is putting some finishing touches (renaming variables, tweaking code style) and settling on a release date. Unfortunately, I had picked a very unfortunate time for fans of settling on dates.

Postgame

The release

With COVID-19’s impact finally reaching Poland on March 4th, fear and uncertainty settled in very quickly. This made committing to any date rather difficult. Eventually, I decided to play it safe and I chose March 15th as the target date. This meant both that I could prepare better myself and that hype could be built for the upcoming announcement.

I wanted the release of the Reconstruction to feel like a big event - at least, as big as you can do in a niche community. However, I had to build up attention for the date while not revealing my cards. This caused me to refer to the event as “related to Zeta”, which was my ZZT-specific emulator. It was technically correct - one of the announcements included migrating Zeta from the GPL to MIT, to match the Reconstruction’s licensing. Fortunately, it seemed to work as planned, with few seeing through my plans!

Finally, on the evening of March 15th, a stream was held. Nervous and noticeably unprepared (as I put all of my experience points into a fancy countdown timer and none into an actual script), I started talking and slowly unraveled the reason for which I brought a few dozen people together.

Thankfully, things went better than expected.

<WorldsOfZZT> oh dang

<Spectre84> Well this looks nifty.

<StuartGipp> This is like the biggest ZZT news since… ZZT came out?

<ZinfandelZT> I’m… I’m gonna cry

And just like that, the project has been unveiled and released.

Good future

I’m happy to say that the Reconstruction of ZZT achieved its goal of opening up new pathways of porting and modification! If you want a summary, Dr_Dos wrote a great article about the first month since its release, and I highly recommend you check it out as a supplement to this one.

One of the more interesting releases made possible by it not mentioned in the article was Variety, a puzzle game by the ZZT legend WiL, utilizing a modified executable as an opt-in experience enhancement.

There are also projects I have pursued myself which are based on the Reconstruction, but admittedly I’m going to have to end this blog post somewhere. Maybe next time…

Bonus: Super ZZT

My inner completionist wasn’t done yet, though. There was one more game left to take on, after all.

Super ZZT was a successor to ZZT released in October 1991. It featured new built-in objects and mechanics, as well as large, scrolling worlds. Unfortunately, it lacked one thing: an editor. It was technically there, hidden behind a command-line argument, but that alone made it significantly less approachable. As such, it only ever gained a fraction of its predecessor’s popularity.

However, that didn’t stop me! The process was more-or-less identical to how I had approached ZZT, so I’m not going to get into details. Similarities between ZZT and Super ZZT’s codebases in addition to my prior experience meant that work progressed much smoother and faster, however. The end result was similarly released as the Reconstruction of Super ZZT a month later.

This, finally, marked the end of my small journey. Around the same time, Mr_Alert did a stellar job reverse-engineering the changes between each version of ZZT, which are also available under the very same MIT license.

Final thoughts

I’d like to finish this post by greeting a few community members who have directly influenced the creation of the Reconstruction:

- Dr_Dos, who spent years trying to rekindle interest in the once-abandoned game creation system - and succeeded! In a sense, it seems like the Museum of ZZT has gone from an archival project to a self-defeating prophecy,

- kkairos, whose support helped push the project from idea to completion,

- and, of course, Tim Sweeney himself! I mean, there wouldn’t be anything to reconstruct without ZZT! …Right?

It’s fascinating to observe just how long-lived the legacy of this quirky little executable is. With new games and projects popping up left and right, I’m really excited to see what the future holds for ZZT as it enters its fourth decade of existence.